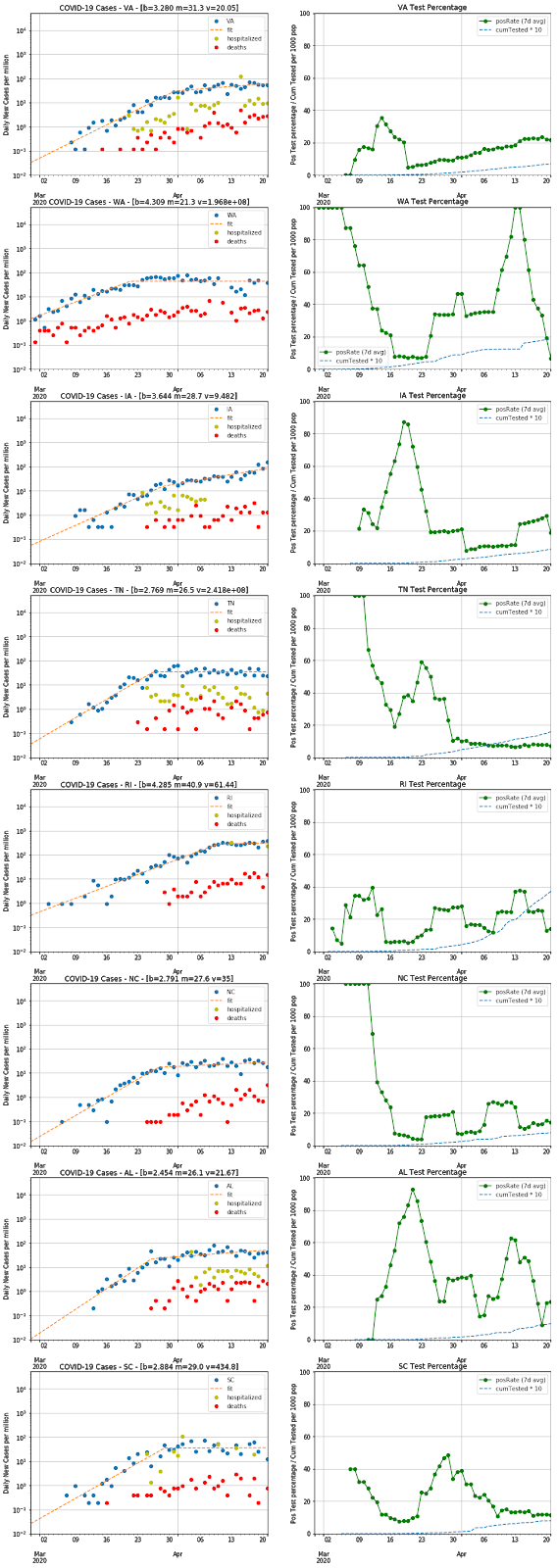

Two weeks after my previous post, more data are available, and it's now pretty clear that stay-at-home measures significantly flatten the exponential growth of infections. Even areas with less restrictive measures (like Iowa or Arkansas) show some flattening of infection growth, which presumably means that every little bit of action people take helps reduce transmission of this virus.

I updated my charts with the latest data from the COVID Tracking Project and added fits to a 2-piece exponential with a bend at some date in the middle. I thought it would be interesting to see what conclusions one could draw from these fits, but it's not clear that they show any truly definitive trends. Still, I thought it worth posting in case someone else spots some insights in these data.

Methodology

As discussed in the previous post, fitting the positive-case rate is not ideal, since changes in testing rates or testing schemes could well introduce trends of their own. The death-rate trends are much less ambiguous, but they lag by a week or two and often have much more statistical noise (which makes them harder to fit). Some states report hospitalization rates, which could potentially be a more solid statistic to fit, but that reporting is still very spotty (as you can see). Therefore, I have stuck with fitting the case rate, while plotting the other two numbers so that one can see how well they track one another. If the case rate and death rate diverge (beyond what the time lag explains), you have good reason to suspect changes in the testing regime.
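
One simple way to look for that kind of divergence is to divide deaths on each day by cases a fixed number of days earlier. The sketch below is illustrative, not the notebook's code: it assumes cumulative daily counts and a single fixed lag (`lag_days` is an assumed value, chosen from the "week or two" above), and the function name is hypothetical. If both series track the same epidemic, the ratio should settle to a roughly constant value; a steady drift suggests something else, such as a change in testing, is moving the case counts.

```python
import math

def lagged_death_case_ratio(cases, deaths, lag_days):
    """Cumulative deaths on day t divided by cumulative cases on day t - lag_days.

    cases and deaths are equal-length lists of cumulative counts, one per day.
    Returns one ratio per day from index lag_days onward (NaN where there were
    no cases yet). A roughly constant ratio means the two series track each
    other once the lag is accounted for; a steady drift suggests the case
    counts are being moved by something else, e.g. a change in testing.
    """
    if len(cases) != len(deaths):
        raise ValueError("cases and deaths must cover the same days")
    ratios = []
    for t in range(lag_days, len(deaths)):
        earlier = cases[t - lag_days]
        ratios.append(deaths[t] / earlier if earlier > 0 else math.nan)
    return ratios

# Synthetic check: cases double every 3 days, and deaths are 2% of the cases
# reported 7 days earlier, so every lagged ratio should come out near 0.02.
days = range(21)
cases = [100 * 2 ** (t / 3) for t in days]
deaths = [0.02 * 100 * 2 ** ((t - 7) / 3) for t in days]
print(lagged_death_case_ratio(cases, deaths, lag_days=7))
```

With real data the lag itself is uncertain, so it is worth trying a few values in the one-to-two-week range and seeing which one makes the ratio flattest.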

I used a 4-parameter piecewise function and fit it by minimizing the sum of squared differences between the logs of the data and the logs of the fit function. Not all of the data yielded good fits; I haven't flagged the poor ones, but you can see them in the charts. In some cases this is because the data don't have the shape the model assumes: some states haven't yet flattened out, and others, like PA (and perhaps IL), may have more of a 3-piece exponential shape. The charts and the table below label the inflection point (where the two exponentials meet) as "m", the doubling time of the earlier exponential as "b", and the doubling time of the later exponential as "v".
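
For anyone who wants to try this kind of fit, here is a minimal sketch of one way to do it. It is not the notebook's code, and it rests on assumptions of mine: the two exponential legs meet continuously at m, b and v are doubling times in days, and a simple grid search over m is acceptable. Working in log base 2, the model is log2 N(t) = c + min(t, m)/b + max(t - m, 0)/v, which for a fixed m is linear in c, 1/b and 1/v, so each candidate m needs only an ordinary linear least-squares solve. Using log2 rather than the natural log just rescales the squared error, so it doesn't change which parameters minimize it. The function names are hypothetical.

```python
import math

def _solve_3x3(a, y):
    """Solve the 3x3 linear system a @ x = y by Gaussian elimination."""
    aug = [list(row) + [rhs] for row, rhs in zip(a, y)]
    for col in range(3):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        if aug[col][col] == 0.0:
            raise ValueError("singular system")
        for r in range(col + 1, 3):
            f = aug[r][col] / aug[col][col]
            for k in range(col, 4):
                aug[r][k] -= f * aug[col][k]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (aug[r][3] - sum(aug[r][k] * x[k] for k in range(r + 1, 3))) / aug[r][r]
    return x


def fit_two_piece_exponential(days, counts, m_grid):
    """Fit log2 N(t) = c + min(t, m)/b + max(t - m, 0)/v by least squares.

    For each candidate bend m, the model is linear in (c, 1/b, 1/v), so the
    normal equations are solved directly; the m with the smallest sum of
    squared log errors wins. Returns (c, b, m, v, sse). counts must be > 0.
    """
    logs = [math.log2(n) for n in counts]
    best = None
    for m in m_grid:
        # Require at least two points on each side so both slopes are defined.
        if sum(t < m for t in days) < 2 or sum(t > m for t in days) < 2:
            continue
        rows = [(1.0, min(t, m), max(t - m, 0.0)) for t in days]
        ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
        aty = [sum(r[i] * y for r, y in zip(rows, logs)) for i in range(3)]
        c, s1, s2 = _solve_3x3(ata, aty)
        sse = sum((c + s1 * r[1] + s2 * r[2] - y) ** 2 for r, y in zip(rows, logs))
        if best is None or sse < best[4]:
            best = (c, s1, s2, m, sse)
    if best is None:
        raise ValueError("no candidate m leaves two points on each side")
    c, s1, s2, m, sse = best
    # Convert slopes (doublings per day) back to doubling times in days.
    b = 1.0 / s1 if s1 != 0 else math.inf
    v = 1.0 / s2 if s2 != 0 else math.inf
    return c, b, m, v, sse


# Synthetic data: doubling every 2.5 days, then every 10 days after day 15.
days = list(range(30))
counts = [10 * 2 ** (min(t, 15) / 2.5 + max(t - 15, 0) / 10) for t in days]
c, b, m, v, sse = fit_two_piece_exponential(days, counts, [5 + 0.5 * k for k in range(41)])
print(f"b = {b:.3f} days, m = {m}, v = {v:.3f} days, sse = {sse:.2e}")
```

In this parameterization, a nearly flat second leg shows up as a very large v, and a declining one would show up as a negative v (a halving time rather than a doubling time). A general-purpose optimizer could refine m continuously instead of on a grid, at the cost of a non-smooth objective.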

Observations

From these charts, one can see that the infection slope dropped significantly in most states sometime in the latter half of March. Many states now have slopes that are nearly flat (you should consider any fit with v > 20 as flat), but some are still growing with doubling times of 7-14 days (GA, MD, AL, KY, MS, NM). The states still growing are a mix of those with strict stay-at-home policies and those with laxer policies, so other factors must be at play (e.g., population density and cultural behavior). In addition, some states haven't yet flattened but are growing relatively slowly (DE, SD, PR, NE, and perhaps RI).
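
To put these doubling times in more familiar terms: a doubling time of T days corresponds to a daily growth factor of 2^(1/T), so the v > 20 rule of thumb means fewer than about 3.5% more cases per day (roughly 27% per week), while doubling times of 7-14 days correspond to roughly 5-10% per day. The small sketch below does that conversion for a few v values copied from the table; the helper names are hypothetical.

```python
def daily_growth_percent(doubling_days):
    """Percent increase per day implied by a doubling time given in days."""
    return 100.0 * (2.0 ** (1.0 / doubling_days) - 1.0)

def weekly_growth_factor(doubling_days):
    """Multiplicative growth over 7 days implied by a doubling time in days."""
    return 2.0 ** (7.0 / doubling_days)

def label_late_slope(v, flat_threshold=20.0):
    """Apply the rule of thumb above: treat v > 20 days as effectively flat."""
    return "flat" if v > flat_threshold else "still growing"

# A few late-phase doubling times (v, in days) copied from the fit table.
late_doubling = {"GA": 13.74, "MD": 10.25, "FL": 70.47, "CO": 1623.0}

for state, v in late_doubling.items():
    print(f"{state}: v={v:g} d, {daily_growth_percent(v):.2f}%/day, "
          f"x{weekly_growth_factor(v):.2f}/week, {label_late_slope(v)}")
```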

The only states showing a decline in case rate are WA and LA. A decline in WA makes sense: it had the earliest infections, so it would plausibly be the first to see one. The LA curve has an unusual shape that may indicate changes in how many tests are administered, but the death rate does seem to have flattened out, so perhaps the decline is real. There are hints of a decline in NY, PA, and IN, but there are still too few data points to establish a clear trend. The NY data looked like a decline until today's (Apr 15) data point came in.

At present, the COVID Tracking Project has no data for AS (American Samoa) and very little for MP, GU, and VI. In addition, the case counts in the 8 smallest states (AK, ND, MT, HI, ME, WV, VT, WY) are probably too low to give good confidence in any fit parameters. It might be helpful to chart all these data normalized by state population (to see the true prevalence of infection), but that would not solve the statistical problem for these small states.
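
A quick way to see why normalization doesn't help: if reported counts behave roughly like Poisson counts (a simplifying assumption; real reporting noise from batching and backlogs is usually larger), a count N carries an uncertainty of about sqrt(N), i.e. a relative error of 1/sqrt(N). Dividing by population rescales the count and its uncertainty by the same factor, so the relative error is unchanged. The numbers in this sketch are hypothetical, not taken from the COVID Tracking Project data.

```python
import math

def per_100k(count, population):
    """Scale a raw count to a rate per 100,000 residents."""
    return 100_000 * count / population

def relative_counting_error(count):
    """Relative 1-sigma error of a Poisson count N: sqrt(N) / N = 1 / sqrt(N)."""
    return 1.0 / math.sqrt(count)

# Hypothetical example values: a low-count state and a high-count state.
examples = [(25, 600_000), (2_500, 20_000_000)]

for cases, pop in examples:
    rate = per_100k(cases, pop)
    rate_err = per_100k(math.sqrt(cases), pop)  # sigma scales by the same factor
    print(f"{cases} cases: {rate:.2f} per 100k +/- {rate_err:.2f} "
          f"({100 * rate_err / rate:.0f}% relative)")
```

The low-count example keeps its 20% relative error after normalization, while the high-count one stays at 2%; only collecting more counts (or pooling days) shrinks it.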

Another interesting question is whether states in warmer climates have lower infection rates, which could imply that COVID-19 will show significant seasonality. In particular, the rapid flattening in FL (despite lax shelter-in-place policies) and the relatively quick downward trend in case rate in LA compared with the Mid-Atlantic states suggested this hypothesis. However, I think the high growth rate in MS and the slower growth rates in many colder-climate states argue against it.

Fits by state

| State | b (days) | m | v (days) | State Name |
|---|---|---|---|---|
| AK | 3.726 | 24.7 | 1.056e+08 | Alaska |
| AL | 2.578 | 25.0 | 12.56 | Alabama |
| AR | 2.708 | 20.4 | 15.9 | Arkansas |
| AS | 0.000 | 0.0 | 0 | American Samoa |
| AZ | 2.674 | 26.0 | 61.4 | Arizona |
| CA | 3.428 | 28.0 | 69.16 | California |
| CO | 3.071 | 26.0 | 1623 | Colorado |
| CT | 2.089 | 26.8 | 19.17 | Connecticut |
| DC | 3.994 | 31.7 | 69.85 | District of Columbia |
| DE | 3.711 | 26.0 | 6.014 | Delaware |
| FL | 2.484 | 27.0 | 70.47 | Florida |
| GA | 2.485 | 23.9 | 13.74 | Georgia |
| GU | 11.780 | 24.0 | 89.11 | Guam |
| HI | 3.913 | 24.3 | 2.513e+07 | Hawaii |
| IA | 3.651 | 29.0 | 14.16 | Iowa |
| ID | 2.972 | 28.5 | 1.537e+08 | Idaho |
| IL | 1.879 | 21.5 | 10.04 | Illinois |
| IN | 2.486 | 29.2 | 2.502e+07 | Indiana |
| KS | 3.397 | 29.3 | 3.515e+05 | Kansas |
| KY | 3.660 | 28.0 | 10.54 | Kentucky |
| LA | 2.129 | 25.0 | 276.3 | Louisiana |
| MA | 3.066 | 28.5 | 15.03 | Massachusetts |
| MD | 2.870 | 28.0 | 10.25 | Maryland |
| ME | 2.963 | 20.3 | 26.41 | Maine |
| MI | 2.486 | 15.4 | 23.57 | Michigan |
| MN | 2.767 | 21.3 | 21.61 | Minnesota |
| MO | 1.951 | 26.6 | 191.5 | Missouri |
| MP | 0.000 | 0.0 | 0 | Northern Mariana Islands |
| MS | 1.672 | 20.9 | 13.66 | Mississippi |
| MT | 3.954 | 26.0 | 123.9 | Montana |
| NC | 2.791 | 26.6 | 44.33 | North Carolina |
| ND | 2.923 | 19.6 | 15.91 | North Dakota |
| NE | 9.018 | 15.0 | 5.8 | Nebraska |
| NH | 4.239 | 31.0 | 942.3 | New Hampshire |
| NJ | 1.881 | 25.5 | 43.08 | New Jersey |
| NM | 4.514 | 29.9 | 12.6 | New Mexico |
| NV | 3.081 | 27.5 | 2.2e+07 | Nevada |
| NY | 1.963 | 22.6 | 34.56 | New York |
| OH | 2.240 | 24.8 | 29.2 | Ohio |
| OK | 2.615 | 27.6 | 80.67 | Oklahoma |
| OR | 4.097 | 26.0 | 528.7 | Oregon |
| PA | 2.403 | 28.7 | 26.71 | Pennsylvania |
| PR | 9.094 | 72.2 | 10.13 | Puerto Rico |
| RI | 4.086 | 30.0 | 5.703 | Rhode Island |
| SC | 2.875 | 28.2 | 5.332e+07 | South Carolina |
| SD | 23.811 | 16.0 | 4.543 | South Dakota |
| TN | 2.769 | 25.7 | 1032 | Tennessee |
| TX | 2.831 | 31.2 | 65.69 | Texas |
| UT | 2.454 | 23.4 | 87.3 | Utah |
| VA | 3.280 | 30.3 | 20.3 | Virginia |
| VI | 2.627 | 20.0 | 4761 | Virgin Islands |
| VT | 3.090 | 24.0 | 2470 | Vermont |
| WA | 4.443 | 21.3 | 1.646e+08 | Washington |
| WI | 1.654 | 18.7 | 21.41 | Wisconsin |
| WV | 2.180 | 27.1 | 36.02 | West Virginia |
| WY | 5.308 | 31.0 | 110.3 | Wyoming |

Notes

As before, my Jupyter notebook file for this analysis is available for anyone who might be interested. Please send me any comments and suggestions that occur to you.